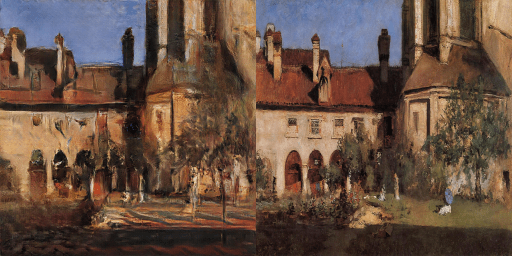

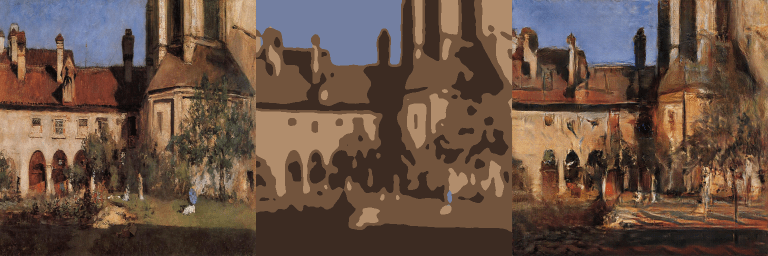

The following are examples of the art generated by one of my later Pix2Pix models, trained with noise (I’ve continued working on this long after I turned the project in). As you can see, the results are not always the best, but I do think there are some genuinely good ones mixed throughout, which gives me hope that a better training regimen will produce better results.

Abstract –

We investigate the role of noise in the training process of an image-to-image style-transfer GAN. We do this by comparing two models trained on the same data, a set of semantic maps and their target images; in one of the models, however, we add noise to the semantic maps prior to training. We then apply both models to a semantic map that was not used in training and measure the Euclidean distance of each generated image from its target image, treating the RGB pixel values as spatial coordinates, to compare the accuracy of the two models.
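To make the comparison concrete, here is a minimal sketch of the two ingredients described above: adding noise to a semantic map and scoring a generated image against its target. It is an illustration under assumptions, not the code from the repository. The function names, the choice of Gaussian noise, the `sigma` value, and the decision to average the per-pixel RGB distance over the image are all hypothetical; the abstract only says that noise is added and that a Euclidean distance over RGB values is measured.

```python
import numpy as np


def add_gaussian_noise(semantic_map, sigma=10.0, seed=None):
    """Return a noisy copy of an (H, W, 3) uint8 semantic map.

    Assumption: zero-mean Gaussian noise; the project may use another kind.
    """
    rng = np.random.default_rng(seed)
    noisy = semantic_map.astype(np.float64) + rng.normal(0.0, sigma, semantic_map.shape)
    # Clip back into the valid 8-bit range before converting.
    return np.clip(noisy, 0, 255).astype(np.uint8)


def mean_rgb_distance(generated, target):
    """Average Euclidean distance between corresponding pixels.

    Each pixel's (R, G, B) triple is treated as a point in 3-D space.
    Assumption: the per-pixel distances are averaged over the image.
    """
    if generated.shape != target.shape:
        raise ValueError("images must have the same shape")
    # Work in float so that uint8 subtraction cannot wrap around.
    diff = generated.astype(np.float64) - target.astype(np.float64)
    per_pixel = np.sqrt((diff ** 2).sum(axis=-1))
    return float(per_pixel.mean())


if __name__ == "__main__":
    # Stand-in arrays; real use would load the generated and target images.
    rng = np.random.default_rng(0)
    target = rng.integers(0, 256, size=(256, 256, 3), dtype=np.uint8)
    generated = add_gaussian_noise(target, sigma=25.0, seed=1)
    print(mean_rgb_distance(generated, target))
```

A lower score means the generated image is closer to its target, so the model trained with noise and the one trained without can be ranked by running each on the same held-out semantic map and comparing the two scores.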

Presentation and Paper –

Results –

Code and Data Set –

Code: https://github.com/MattTucker22689/pix2pix-GAN-Project

The data set: https://archive.org/details/wikiart-dataset

Related Papers –